Description

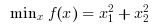

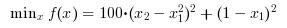

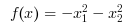

Search for the minimum of an unconstrained optimization problem: find the x that minimizes f(x), with no constraints on x.

The fminunc function calls Ipopt, an optimization library written in C++, to solve the unconstrained optimization problem.

Options

The options allow the user to set various parameters of the optimization problem. The syntax for the options is:

options = list("MaxIter", [---], "CpuTime", [---], "GradObj", ---, "Hessian", ---, "GradCon", ---);

- MaxIter : A scalar specifying the maximum number of iterations that the solver may take.

- CpuTime : A scalar specifying the maximum amount of CPU time, in seconds, that the solver may take.

- Gradient : A function representing the gradient of the objective, returned in vector form.

- Hessian : A function representing the Hessian of the Lagrangian as a symmetric matrix, with x, the objective factor, and lambda as input parameters. Refer to Example 5 for the definition of a Lagrangian Hessian function.

options = list("MaxIter", [3000], "CpuTime", [600]);
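As a minimal sketch of how such an options list might be passed to the solver, the following assumes the calling sequence `[xopt, fopt, exitflag, output] = fminunc(f, x0, options)`, which is not shown in this section. The Rosenbrock objective and the starting point are illustrative choices, and only the `MaxIter` and `CpuTime` keys described above are used.

```scilab
// Objective: the Rosenbrock function (chosen here only for illustration).
function y = f(x)
    y = 100*(x(2) - x(1)^2)^2 + (1 - x(1))^2;
endfunction

// Starting point (arbitrary choice).
x0 = [-1, 2];

// Limit the solver to 1500 iterations and 500 seconds of CPU time.
options = list("MaxIter", [1500], "CpuTime", [500]);

// Assumed calling sequence; it is not given in this section.
[xopt, fopt, exitflag, output] = fminunc(f, x0, options);

disp(xopt);   // point returned by the solver
disp(fopt);   // objective value at that point
```

Leaving out the third argument should fall back to the toolbox defaults, if the calling sequence accepts two arguments as assumed here.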

The exitflag reports the status of the optimization, as returned by Ipopt. The values it can take and what they indicate are described below:

- 0 : Optimal Solution Found

- 1 : Maximum Number of Iterations Exceeded. Output may not be optimal.

- 2 : Maximum amount of CPU Time exceeded. Output may not be optimal.

- 3 : Stop at Tiny Step.

- 4 : Solved To Acceptable Level.

- 5 : Converged to a point of local infeasibility.

For more details on exitflag, see the Ipopt documentation at http://www.coin-or.org/Ipopt/documentation/
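The exitflag values listed above could be turned into readable messages with a small helper. This is only a sketch: `exitflagMessage` is a hypothetical name, not part of the toolbox, and the fallback text for unlisted values is an assumption.

```scilab
// Hypothetical helper (not a toolbox function): maps an exitflag
// value to the status text listed in this section.
function msg = exitflagMessage(exitflag)
    select exitflag
    case 0 then
        msg = "Optimal Solution Found";
    case 1 then
        msg = "Maximum Number of Iterations Exceeded. Output may not be optimal.";
    case 2 then
        msg = "Maximum amount of CPU Time exceeded. Output may not be optimal.";
    case 3 then
        msg = "Stop at Tiny Step.";
    case 4 then
        msg = "Solved To Acceptable Level.";
    case 5 then
        msg = "Converged to a point of local infeasibility.";
    else
        // Values not listed in this section; consult the Ipopt documentation.
        msg = "Unlisted exitflag value";
    end
endfunction

// Example: print the status for a given flag.
mprintf("%s\n", exitflagMessage(1));
```

A caller would typically treat only 0 (and possibly 4) as success and inspect the result more carefully for any other value.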

The output data structure contains detailed information about the optimization process. It is of type "struct" and contains the following fields.

- output.Iterations: The number of iterations performed.

- output.Cpu_Time : The total CPU time taken.

- output.Objective_Evaluation: The number of objective evaluations performed.

- output.Dual_Infeasibility : The dual infeasibility of the final solution.

- output.Message: The output message for the problem.
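The fields above can be gathered into a short report. The sketch below uses a hypothetical helper, `outputSummary`, which is not part of the toolbox. It assumes the numeric fields are scalars and that `Message` is a string.

```scilab
// Hypothetical helper (not a toolbox function): formats the fields of the
// output struct described above as a column of strings, one per field.
function summary = outputSummary(output)
    summary = [
        msprintf("Iterations: %d", output.Iterations)
        msprintf("CPU time: %g", output.Cpu_Time)
        msprintf("Objective evaluations: %d", output.Objective_Evaluation)
        msprintf("Dual infeasibility: %g", output.Dual_Infeasibility)
        msprintf("Message: %s", output.Message)
    ];
endfunction

// Usage, assuming `output` was returned by a call to fminunc:
//   mprintf("%s\n", outputSummary(output));
```

Returning the lines rather than printing them keeps the helper easy to reuse, for example when writing results to a log file.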